Fifty Years Later, Moore's Computing Law Holds

Politics / Technology Nov 28, 2016 - 03:18 PM GMT By: STRATFOR

By Matthew Bey: Gordon Moore, co-founder of Intel Corp., published his now iconic article, "Cramming More Components onto Integrated Circuits," in the journal Electronics on April 19, 1965. In this paper, Moore observed that the number of transistors fitting on an integrated circuit had roughly doubled each year. A decade later the time-scale was revised to 18-24 months and dubbed "Moore's Law." Following this principle, a computer purchased today would cost about half as much in two years. Processing is now down to one-sixtieth the cost it was a decade ago.
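The arithmetic behind these figures can be checked with a short sketch. It assumes, purely for illustration, that cost falls by half at a fixed interval; the function names `cost_fraction` and `implied_halving_months` are hypothetical and do not come from Moore's paper.

```python
import math


def cost_fraction(years: float, halving_months: float) -> float:
    """Fraction of the original cost left after `years`,
    assuming cost halves every `halving_months` (an idealized model)."""
    return 0.5 ** (years * 12 / halving_months)


def implied_halving_months(years: float, fraction: float) -> float:
    """Halving period in months implied by cost falling to `fraction` over `years`."""
    return years * 12 / -math.log2(fraction)


# Compare the two ends of the 18-24 month range over one decade.
for months in (18, 24):
    ratio = 1 / cost_fraction(10, months)
    print(f"halving every {months} months: about 1/{ratio:.0f} of the cost after 10 years")

# Work backward from the article's one-sixtieth figure.
period = implied_halving_months(10, 1 / 60)
print(f"one-sixtieth in a decade implies halving roughly every {period:.1f} months")
```

Under this simplified model, a 24-month halving period gives about one thirty-second of the original cost after a decade and an 18-month period gives about one hundredth, so the one-sixtieth figure sits inside the 18-24 month range, at roughly 20 months.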

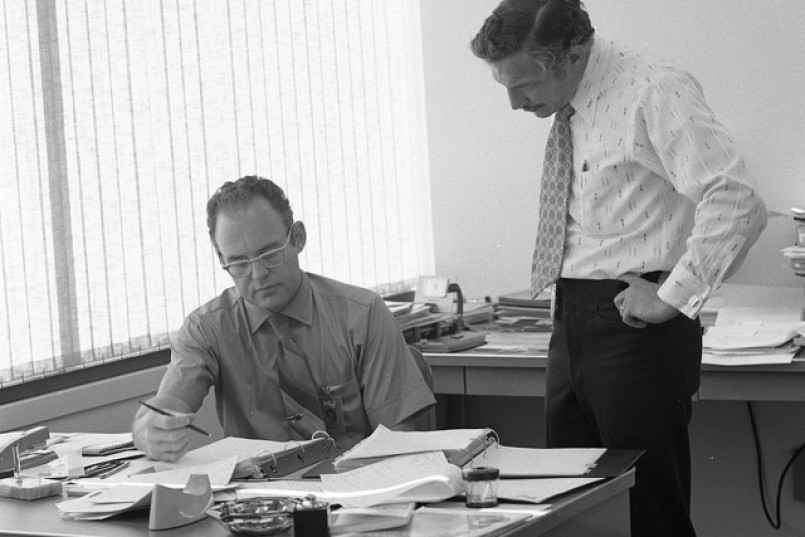

Intel co-founders Gordon Moore and Robert Noyce meeting in Intel's offices in 1970. (INTEL FREE PRESS/Wikimedia Commons)

The operation of Moore's Law over the past 50 years has changed the world in enormous ways. The resilience of his observation has been equally impressive. Within a short time of his formulation of the law, it became the core business model of semiconductor and fabrication companies as well as the foundational logic driving long-term strategy for corporations in the microchip and semiconductor business. The prescience of Moore's vision is most clear in the second paragraph of his 1965 paper, which forecasts many of the devices exhibited at the January 2015 Consumer Electronics Show in Las Vegas:

Integrated circuits will lead to such wonders as home computers — or at least terminals connected to a central computer — automatic controls for automobiles, and personal portable communications equipment. The electronic wristwatch needs only a display to be feasible today.

In 2015, not only does the world have automatic controls for automobiles but also practical self-driving technology. Cellular phones and other mobile devices are now the most profitable segment of the electronics industry and the economic driving force maintaining the pace described in Moore's Law. Cloud-based computing is becoming mainstream for a wide range of applications. This month, Apple made headlines by selling over a million Apple Watches in a few hours.

This exponential growth in processing has accelerated technological progress and forced every country to adapt to change more quickly than at any other point in history. This is true not only for computers and digital information, but for essentially everything that exists in today's world. Unlike the Neolithic Revolution 12,000 years ago or the 1760-1840 Industrial Revolution, today's digital world touches every part of life and nearly every country on the planet. The developing world, too, is gaining access to advanced technologies more quickly. That access has already transformed nations like Rwanda into potential regional communications technology hubs. Now, more than ever, technology is geopolitical.

From the 1950s to Now

The rapid development and innovation in U.S. and later Japanese semiconductor and computer technology gave the United States and its allies a powerful advantage over their Soviet competitors. Throughout most of the Cold War, the Soviet Union was on average a few years behind the Americans and Japanese. Because technology was improving exponentially, even this short lag meant that it was more expensive for the Soviets to produce what was typically an inferior computer chip.
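To see why a lag of only a few years matters under exponential growth, consider a minimal sketch. The two-year doubling period and the sample lags of 2, 3 and 5 years are assumptions chosen for illustration (the text says only "a few years"), and `lag_factor` is a hypothetical helper.

```python
def lag_factor(lag_years: float, doubling_years: float = 2.0) -> float:
    """Capability ratio between a leader and a follower that trails by
    `lag_years`, assuming capability doubles every `doubling_years`."""
    return 2 ** (lag_years / doubling_years)


# Hypothetical lags; the article does not give an exact figure.
for lag in (2, 3, 5):
    print(f"{lag}-year lag: leader ahead by about {lag_factor(lag):.1f}x")
```

In this idealized model a fixed lag in years becomes a fixed multiple in capability, so the absolute gap between leader and follower widens every year even if the follower never falls further behind in time.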

The differences between the Soviet and U.S. technology sectors stemmed from factors that Moore's Law does not address: the structure of research, development and eventual application in each country. The competition between the two powers throughout the Cold War provided a strong incentive for both governments to fund and develop technology. In the United States, NASA, the National Science Foundation and the National Institutes of Health invested a huge chunk of federal funding in innovation, often defense-related. But by the 1960s, and into the 1970s, defense spending fed back to the development of control systems and computers. This was true in the Soviet Union as well.

Unlike the United States, however, the Soviet Union's research and development was almost exclusively geared toward the defense industry. Much like the previous German model, the Soviet model for science was full state control of funding, research and even the scientists and technologists themselves. This state-centered model left little room for expansion into consumer applications. In the end, the Soviet Union maintained an even footing in specific defense-related industries, but was far behind elsewhere.

In the U.S. capitalist model, by contrast, the focus of the domestic economy was the consumer market. Many of the innovations originally developed for defense purposes were quickly integrated into consumer goods and services thanks to more diverse capital allocation. IBM, for example, was responsible for over two-thirds of the world's computers in the mid-1960s, but much of their initial revenue was generated from the development of the B-52 bomber's computer systems and other air force technology. Defense spending also bankrolled a significant part of the initial development of integrated circuits in the early 1960s.

The space race against the Soviet Union in the late 1960s also helped the U.S. military consume over half of the world's integrated circuits. However, those technologies eventually became commonplace in consumer electronics. By the 1980s the United States had grown into the world's premier industrial economy, and its companies were pioneering innovation in areas such as home computing. The United States had also come to dominate the world's computer and semiconductor markets.

In Japan, most innovation was done in the context of a centralized government system that allowed Tokyo to exploit lower labor costs to produce the components more cheaply than the United States. But, unlike the United States, it did not allow new developments to rapidly extend to other adaptations.

The foundation laid by the U.S. system of innovation brought about perhaps the two most important revolutionary technological events of the post-Cold War era. In the final days of the Cold War and the period directly after the Soviet collapse — brought on in part by the lack of a consumer-oriented industry — the United States unveiled both the Global Positioning System and the Internet. These Cold War technologies reached the consumer market through the 1990s. The U.S. also spun off commercial satellite launches, paving the way for the privatization of space launches and space-based technologies.

The Internet — and machine-to-machine connections more broadly — led to a number of crucial developments. The most important of these was the online interface that gave individuals access to almost any information needed. By using computers to communicate, the Internet enabled people to organize and connect without physically meeting, far beyond the scope of a single telephone line.

Risks of Exponential Growth

The growth of computing, laid out in Moore's Law, was not without its challenges. It made the theft of information more feasible and prevalent than ever before. At both the individual and corporate level this fundamentally exposed once stable concepts of privacy and intellectual property. At the national level it brought about concerns of espionage and, for closed governments such as China, the dissemination of intellectual ideas. These concerns are at the center of the European Union's debate with Google on concepts like a person's right to be forgotten or the dominance and integration of Google, or previously Microsoft, into everyday life.

Cyber "warfare" has also manifested in relations between states and between state and society. The revelation of the extent of the National Security Agency's monitoring of communications has raised tensions between the United States and countries such as Brazil. In April, University of Toronto researchers also uncovered code originating from China being used to hijack personal computers in order to unleash distributed denial of service attacks to take down websites Beijing opposes. Hacks also extend to asymmetric attacks where small groups can attack entire corporations.

Because of the startling speed of innovation, risk has consequently escalated over a relatively short period. Not all outcomes, however, have been negative. Processing of "Big Data" has enabled sophisticated modeling of genomes, petroleum reservoirs, supply chains and logistics, as well as financial engineering and medical research. Many of the breakthroughs in these fields simply would not be possible today, or at least would not be as frequent, with weaker computer processing.

Moving forward, the world will become increasingly dependent on computers. Implementing and securing smart technology will likewise become a fundamental concern. The last few years have seen data breaches at a number of companies. Smart grids, automated and additive manufacturing and remote sensors are all going to become more important in the future, embodied in what has come to be called the "Industrial Internet," "Internet of Things" or "Industry 4.0."

The End of Moore's Law

Ultimately, Moore's Law is not an unbreakable law. It is merely an observed trend that has continued for far longer than Gordon Moore himself even expected. The question now is whether the trend will continue and if it is even worth investing the resources to maintain.

The early rapid development of the semiconductor and computing markets was originally financed by the defense sector. Consistent government contracts meant consistent investment. Once the defense industry's market gave way to consumer electronics, however, desktop computing power became the main source of income that drove profits and incentivized maintaining the trend line set by Moore's Law. Eventually, of course, desktop computing power reached a point where the consumer market did not need to upgrade as frequently. Subsequently, the market's growth began to slow down. In time, the same became true for laptops.

The profit margins just did not exist. Today mobile computing in devices like tablets and cellphones represents the driving force behind innovation and continued investment into more powerful, smaller and efficient computer chips. By the end of the decade, tablet sales may be comparable to personal computer sales. The cellphone market, too, will be strong.

At some point, as with personal computers and laptops before them, growth in computing power for tablets and cellphones will no longer produce corresponding improvements in the user experience. Consumers will no longer be as interested in paying as much money for the latest iPhone, iPad or Samsung Galaxy.

Looking beyond mobile electronics, the question becomes whether the next phase will require development to progress as rapidly. Most of the growth in demand may emerge from cloud computing, which does not rely as much on physical size, or from remote sensors, which do not need more computing power than regular computers. There will continue to be niche applications, such as video games, but it is questionable whether their market share can ever approach the beast that is the personal computer and cellphone market. Progress on Moore's Law will inevitably slow down, and could do so quite soon, possibly even by the end of the decade. The market for existing technology will still be there, but it will not drive innovation into more powerful chips.

The industry is now reaching a point where increases in materials and fabrication technology are leading to rapid growth in design costs as the actual transistor itself gets smaller and smaller. These costs are now rising more than the value of the end product, meaning the exponential growth predicted by Moore's Law could slow because it is simply not profitable. In an early 2015 survey, over half of semiconductor industry leaders said they believe that Moore's Law will no longer be viable below 22 nanometer transistors. Currently, investors are exploring the 10 nanometer (or less) transistor. Even the existing technology tracks will eventually reach their physical limitations as nodes become smaller.

There are of course a number of innovative technologies that could be put in place to extend the shelf life of Moore's Law. These include using spintronics technology, using 2D materials or scaling nodes and gates vertically. They also include the potential for radically different forms of computing such as quantum computing. These innovations, however, can only be justified for rapid mass production if demand for their benefits translates into real-world applications. Massive budgets are required to replace semiconductor fabrication equipment every 18 months or so.

In the end, Moore's Law has revolutionized technology in the computer age. It is quite amazing that it has lasted 50 years and counting. But the law is merely an observation, and is now facing perhaps its most fundamental challenge.

"Fifty Years Later, Moore's Computing Law Holds is republished with permission of Stratfor."

© Copyright 2016 Stratfor. All rights reserved
